Addressing AR/VR/MR/XR Device Component Quality

Augmented, virtual, and mixed reality (AR/VR/MR) have been booming in recent years, with an ever-increasing array of VR headsets, smart glasses, and AR/MR industrial devices in use. The market for these systems, referred to collectively as “XR,” is expected to continue growing rapidly, at a compound annual growth rate (CAGR) of 46% through 2025.[1]

To make these devices, engineers have drawn on multiple display types, a range of technologies, and a wide array of optical systems. As a result, XR devices are highly diverse in architecture, form, and function, with designs intended to meet many different application goals. Broadly speaking, “Three categories of head mounted displays have emerged: smart glasses, or digital eyewear, as an extension of eyewear; virtual reality headsets as an extension of gaming consoles; and augmented and mixed reality systems as an extension of computing hardware.”[2]

Three types of head-mounted displays (HMDs) and their antecedents. (Image source: Electro Optics)

For example, a pair of AR smart glasses intended for consumer use may be designed to minimize hardware size and weight, typically using smaller displays or optical modules that, in turn, limit the display field of view (FOV). A VR headset intended for gaming, on the other hand, may be designed to maximize FOV and boost visual performance using high-resolution and/or varifocal displays. An MR headset developed for military use may be designed to optimize durability and functionality, integrating with helmet hardware to fully and securely surround the user’s head.
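To see why a smaller display tends to limit FOV, consider a simple-magnifier model in which the display sits at the focal plane of an eyepiece lens, so the full angular field is FOV = 2·arctan(w / 2f) for display width w and focal length f. The Python sketch below is an idealized illustration under that thin-lens assumption (real XR optics, and waveguide-based glasses in particular, are more complex); the function name and the 10 mm, 40 mm, and 20 mm values are hypothetical.

```python
import math


def angular_fov_deg(display_width_mm: float, focal_length_mm: float) -> float:
    """Full horizontal FOV (degrees) of a display placed at the focal plane of
    an ideal thin magnifier lens (virtual image at infinity).

    Each display edge sits w/2 off-axis, so the half-angle is atan((w/2) / f).
    """
    half_angle_rad = math.atan((display_width_mm / 2.0) / focal_length_mm)
    return math.degrees(2.0 * half_angle_rad)


# Hypothetical example: the same 20 mm focal-length optic paired with a small
# microdisplay and with a larger panel.
FOCAL_LENGTH_MM = 20.0
for width_mm in (10.0, 40.0):
    fov = angular_fov_deg(width_mm, FOCAL_LENGTH_MM)
    print(f"{width_mm:.0f} mm display -> {fov:.1f} deg FOV")
```

With the same 20 mm optic, the 10 mm display yields roughly a 28° FOV and the 40 mm panel roughly 90°, which is the size-versus-FOV trade-off described above.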

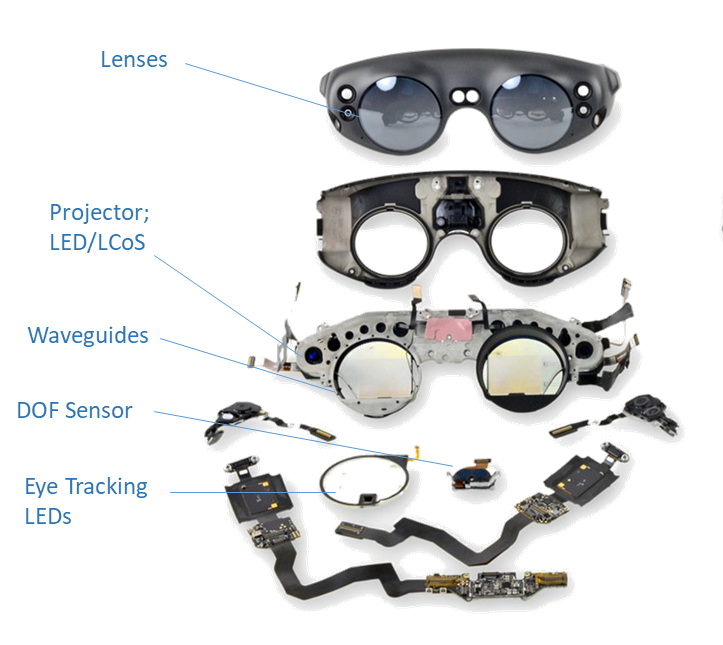

However, certain elements are common to almost all XR devices, enabling them to serve their essential purpose of presenting digital images to the wearer. Whether referred to as near-eye displays (NEDs) or head-mounted displays (HMDs), these devices typically include lenses, a light engine, a display mechanism, a power supply/battery, a computational processor, and input devices such as cameras and sensors, although the specifics of each component may vary widely from one device to the next. For example, AR/MR devices often use waveguides as a display combiner but can employ an almost limitless variety of waveguide structures, configurations, and combinations.

Optical components of the Magic Leap One MR device. (Image source: iFixit)

Beyond the core functional elements, devices may also include microphones and audio speakers, control buttons or touchpads, and USB ports. Cameras may be used for device input and can also let the user take photos. Multiple types of sensors might be incorporated, such as gyroscopes, accelerometers, magnetometers, altitude and humidity sensors, and near-infrared (NIR) 3D sensing emitters/receivers.

Hardware components of the Vuzix Blade smart glasses. (Image © Vuzix)

AR/VR/MR Components

Each of the many hardware components in an XR device presents its own design, quality, and performance challenges that must be solved through careful design and testing. The remainder of this post looks at some of the core components of AR/MR smart glasses, with a focus on the optical elements, and the visual quality challenges they may present.

The array of components in Microsoft’s HoloLens 2 MR glasses. (Image © Microsoft)

Computer Processor

AR/MR devices have been referred to as “wearable computers,” so, not surprisingly, the central processing unit (CPU) is an essential component. The computing unit is often placed in one of the temples (the arms on each side that curve behind the wearer’s ears to hold the glasses in place), so the processor must be small enough to fit there. For example, Qualcomm’s Snapdragon™ XR1 platform is built on the same Qualcomm Snapdragon chipset used in smartphones.[3]

Lenses

Like ordinary eyeglasses, smart glasses need lenses. Many AR/MR glasses available on the market today can be fitted with custom lenses to match the wearer’s prescription, filter blue light for use with computer screens, or include “smart lenses” that adjust their tint to act as sunglasses depending on ambient light conditions.

Ray-Ban Stories smart sunglasses, which integrate with Facebook, are offered with a choice of lens colors and a prescription lens option. (Image © Ray-Ban)

Optics & Display Module

In AR/MR devices, the picture generating unit (PGU) might include a display module, which can be a microdisplay built on an OLED, MicroLED, or MicroOLED panel, or a projection system based on lasers or LED/LCoS (liquid crystal on silicon) technology. AR/MR devices also include a combiner that, as its name suggests, combines light rays from the ambient environment with digital images generated by the device to create the integrated view seen by the wearer.

Waveguides & Lightguides

Waveguides are the most common combiner for AR/MR devices. Optical waveguides, thin pieces of glass or plastic, bend and transmit light. They propagate a light field by total internal reflection (TIR), bouncing light between the inner and outer surfaces of the waveguide layer, and combine the digital and real-world images into one.
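TIR happens only for rays that strike the waveguide surface at more than the critical angle θc = arcsin(n_surround / n_waveguide), measured from the surface normal. As a rough sketch, assuming the waveguide is surrounded by air and using textbook indices of about 1.5 for ordinary glass and 1.8 for a high-index glass (illustrative values, not specifications of any device mentioned here), the check can be written as:

```python
import math


def critical_angle_deg(n_waveguide: float, n_surround: float = 1.0) -> float:
    """Critical angle for TIR, in degrees from the surface normal, at the
    boundary between the waveguide and its surroundings (air by default)."""
    if n_waveguide <= n_surround:
        raise ValueError("TIR requires the waveguide index to exceed the surrounding index")
    return math.degrees(math.asin(n_surround / n_waveguide))


def is_guided(incidence_deg: float, n_waveguide: float, n_surround: float = 1.0) -> bool:
    """True if a ray striking the surface at incidence_deg (from the normal)
    exceeds the critical angle and is therefore trapped by TIR."""
    return incidence_deg > critical_angle_deg(n_waveguide, n_surround)


# Illustrative indices: ordinary glass (~1.5) vs. a high-index glass (~1.8).
for n in (1.5, 1.8):
    print(f"n = {n}: critical angle = {critical_angle_deg(n):.1f} deg, "
          f"ray at 40 deg guided: {is_guided(40.0, n)}")
```

A higher-index substrate lowers the critical angle (about 41.8° at n = 1.5 versus about 33.7° at n = 1.8), so a wider range of ray angles stays trapped, which is one reason high-index glass is attractive for waveguide combiners.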

Waveguides come in several types, including reflective, polarized, diffractive, and holographic designs. The physical features that guide the light in these cases may be prisms, mirror arrays, gratings, or metasurfaces, each taking a range of unique forms. While combiners effectively direct light and images into the wearer’s eyes, they can also cause unintended effects, including dimming, distortion, double images, and diffraction effects such as color separation or blurring, and the edges of the combiner may be visible to the wearer.[4] Avoiding these optical defects requires testing and adjustment during the design phase.
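The color separation mentioned above follows from the grating equation for diffractive structures, n_wg·sin θ_out = n_in·sin θ_in + m·λ/Λ, where λ is the wavelength, Λ the grating period, and m the diffraction order: different wavelengths are steered to different angles. The sketch below assumes a hypothetical in-coupling grating with a 400 nm period on n = 1.8 glass at normal incidence; the function name and parameter values are illustrative only.

```python
import math
from typing import Optional


def diffracted_angle_deg(
    wavelength_nm: float,
    period_nm: float,
    n_waveguide: float,
    incidence_deg: float = 0.0,
    n_incident: float = 1.0,
    order: int = 1,
) -> Optional[float]:
    """Angle (degrees from the normal, inside the waveguide) of a diffracted
    order, from the grating equation
        n_waveguide * sin(theta_out) = n_incident * sin(theta_in) + order * wavelength / period.
    Returns None when |sin(theta_out)| > 1, i.e. that order does not propagate.
    """
    s = (n_incident * math.sin(math.radians(incidence_deg))
         + order * wavelength_nm / period_nm) / n_waveguide
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


# Hypothetical in-coupling grating: 400 nm period on n = 1.8 glass, light
# arriving at normal incidence from air.
for color, wavelength in (("blue", 460.0), ("green", 530.0), ("red", 630.0)):
    angle = diffracted_angle_deg(wavelength, 400.0, 1.8)
    print(f"{color}: {wavelength:.0f} nm -> {angle:.1f} deg inside the waveguide")
```

Under these assumptions, blue (460 nm), green (530 nm), and red (630 nm) light travels through the waveguide at roughly 40°, 47°, and 61°, so each color bounces at a different spacing. This is one reason some designs dedicate separate waveguide layers to different color channels.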

Close-up of the Magic Leap One smart glasses, which use a stack of waveguide layers (visible as slight striations on the square element at center left of the lens). There are six waveguide layers, two for each color channel (red, green, and blue). The two electronic elements on the right side of the lens are NIR LEDs used for sensing. (Image source: iFixit)

XR Device Visual Quality Challenges

With such a multitude of component structures, XR devices present a complex set of quality testing challenges for device designers and manufacturers. For example, the sheer “number of sensors, and the technology associated with them, combined with the need to operate at low power and be lightweight, is an on-going challenge for the device builders.”[5] The complexity of integrated optical, illumination, and display systems also creates a need for sophisticated visual quality testing and measurement.

Various optical components from XR devices used to shape, bend, and transmit light to create images.

Because they are transparent, AR/MR displays can exhibit issues caused by the substrates themselves (such as glass or plastic), by lens films and layers (such as anti-reflective coatings or tints), by the shape and curvature of the lens, or by elements formed on substrate layers, such as the nanostructures that enable lightguides. For example, images can appear distorted or be accompanied by an offset shadow image, an effect called ghosting. Ghosting is typically caused by the image reflecting off both the front and back surfaces of the lens. Multifocal, varifocal, and foveated optical designs, along with newer approaches such as liquid lens optics, further increase the complexity of visual quality measurement.
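A back-of-the-envelope Fresnel calculation shows why ghosting can be so noticeable. At normal incidence, each uncoated surface reflects R = ((n1 - n2)/(n1 + n2))^2 of the incident light, which is about 4% for air and glass with n ≈ 1.5. The sketch below compares the back-surface ghost with the front-surface reflection for a thin plate, assuming normal incidence and ignoring absorption and higher-order bounces; the function names and the 0.5% coated reflectance are hypothetical.

```python
def fresnel_reflectance_normal(n1: float, n2: float) -> float:
    """Fraction of light reflected at normal incidence from the boundary
    between media of index n1 and n2 (Fresnel equations)."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def ghost_to_primary_ratio(r_front: float, r_back: float) -> float:
    """Brightness of the back-surface ghost relative to the front-surface
    reflection for a thin plate at normal incidence.

    The ghost crosses the front surface twice (transmitting 1 - r_front each
    way) and reflects once off the back surface. Absorption and higher-order
    bounces are ignored.
    """
    return (1.0 - r_front) ** 2 * r_back / r_front


r_glass = fresnel_reflectance_normal(1.0, 1.5)  # uncoated air/glass surface
print(f"per-surface reflectance: {r_glass:.3f}")
print(f"ghost/primary, uncoated back surface: {ghost_to_primary_ratio(r_glass, r_glass):.2f}")
# Hypothetical anti-reflective coating that cuts back-surface reflectance to 0.5%.
print(f"ghost/primary, AR-coated back surface: {ghost_to_primary_ratio(r_glass, 0.005):.2f}")
```

For an uncoated plate the ghost comes out at roughly 92% of the primary reflection's brightness; with the hypothetical coating on the back surface it drops to roughly 12%, which is why anti-reflective coatings are a common countermeasure.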

Another look at HoloLens 2 components, partially disassembled.

AR/MR images can also present issues such as inconsistent brightness (luminance) or color (chromaticity), incomplete or incorrect shapes, distortion, rotation, and poor contrast against the real-world backdrop. Addressing all these quality considerations for XR devices with unique optical characteristics is a continual challenge for XR device makers. To measure and test devices with differing form factors and specifications—such as focus, angular FOV, or resolution—device makers need a range of visual test capabilities.
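As a sketch of how such luminance and chromaticity checks might be quantified, the Python below takes CIE XYZ tristimulus values sampled at several points across a display (the kind of data an imaging colorimeter produces), converts them to CIE 1976 u'v' chromaticity coordinates, and reports luminance uniformity as min/max along with the worst-case Δu'v' from the mean color. These metric definitions are one common choice rather than the method of any particular standard or test software, and the function names and sample values are hypothetical.

```python
import math
from typing import List, Tuple

XYZ = Tuple[float, float, float]


def uv_prime(X: float, Y: float, Z: float) -> Tuple[float, float]:
    """CIE 1976 UCS chromaticity coordinates (u', v') from tristimulus XYZ."""
    denom = X + 15.0 * Y + 3.0 * Z
    return 4.0 * X / denom, 9.0 * Y / denom


def uniformity_report(samples: List[XYZ]) -> Tuple[float, float]:
    """Return (luminance uniformity, worst-case delta u'v').

    samples holds (X, Y, Z) measured at points across the display; Y is the
    luminance (e.g. cd/m^2). Uniformity is min(Y) / max(Y), and delta u'v' is
    each point's Euclidean distance from the mean (u', v') chromaticity.
    """
    luminances = [Y for _, Y, _ in samples]
    lum_uniformity = min(luminances) / max(luminances)

    coords = [uv_prime(*s) for s in samples]
    u_mean = sum(u for u, _ in coords) / len(coords)
    v_mean = sum(v for _, v in coords) / len(coords)
    worst_duv = max(math.hypot(u - u_mean, v - v_mean) for u, v in coords)
    return lum_uniformity, worst_duv


# Hypothetical measurements at the center, two edges, and a corner of a display.
samples = [
    (190.1, 200.0, 217.8),  # center
    (171.1, 180.0, 196.0),  # left edge: dimmer, similar color
    (175.0, 170.0, 170.0),  # right edge: slightly reddish
    (150.0, 155.0, 195.0),  # corner: dimmer and bluish
]
uniformity, duv = uniformity_report(samples)
print(f"luminance uniformity (min/max): {uniformity:.2f}")
print(f"worst-case delta u'v' from mean: {duv:.4f}")
```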

Radiant provides effective solutions for test and measurement of XR displays to ensure high-quality visual experiences, as seen from the user's eye position in headsets and smart glasses. Our solutions combine precise, high-resolution measurement using our ProMetric® Imaging Photometers and Colorimeters (up to 61 MP resolution) with rapid sequencing of device tests in our TT-ARVR Software.

Radiant offers XR optical test systems (such as our AR/VR Lens) that capture up to a 120° horizontal FOV for evaluating larger display areas and fully immersive visual experiences. Our solutions for testing display modules, lenses and lightguides, near-infrared sensors, and headset architecture ensure that display makers have flexible, end-to-end visual testing capabilities for all component stages of XR devices.
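Camera resolution and FOV trade off directly: the wider the field a measurement camera captures, the fewer pixels it has per degree. A rough check divides horizontal pixel count by horizontal FOV, assuming pixels are spread evenly across the field (wide-angle lenses are not perfectly uniform). The 9,000-pixel camera and the 2,000-pixel, 100° display below are hypothetical numbers, not the specifications of any Radiant product or headset, and the function name is illustrative.

```python
def pixels_per_degree(horizontal_pixels: int, horizontal_fov_deg: float) -> float:
    """Average angular sampling density (pixels per degree), assuming pixels
    are spread evenly across the field; real wide-angle lenses are not
    perfectly uniform, so local values will vary."""
    return horizontal_pixels / horizontal_fov_deg


# Hypothetical numbers for illustration only.
camera_ppd = pixels_per_degree(9_000, 120.0)   # measurement camera, 120 deg H FOV
display_ppd = pixels_per_degree(2_000, 100.0)  # headset display under test
print(f"camera: {camera_ppd:.0f} ppd, display: {display_ppd:.0f} ppd")
print(f"camera pixels per display pixel: {camera_ppd / display_ppd:.2f}")
```

In this example the camera samples 75 pixels per degree against the display's 20, or about 3.75 camera pixels per display pixel along each axis. As a rule of thumb, several camera pixels per display pixel are needed to resolve individual display pixels and pixel-level defects.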

CITATIONS

1. Technavio, “Augmented Reality and Virtual Reality Market by Technology and Geography: Forecast and Analysis 2021-2025,” June 2021.

2. Kress, B., “Meeting the optical design challenges of mixed reality,” Electro Optics, January 31, 2019.

3. Harfield, J., “How Do Smart Glasses Work?” MakeUseOf, July 14, 2021.

4. Guttag, K., “AR/MR Optics for Combining Light for a See-Through Display (Part 1),” KGOnTech, October 21, 2016.

5. Peddie, J., “Technology Issues,” in Augmented Reality, Springer, Cham, 2017. DOI: https://doi.org/10.1007/978-3-319-54502-8_8
